Artificial intelligence is rapidly becoming the backbone of modern manufacturing in the UK, turning traditional factories into intelligent ecosystems. However, as some manufacturers pull ahead with AI, others are stuck in pilot mode, facing a multitude of blockers, or are unwilling to introduce AI altogether.

In early October, I attended the Scottish Manufacturing & Supply Chain Conference. As a panellist on the CeeD ‘AI in Manufacturing’ panel, representing technology partners and our views on AI, I was certainly in good company: 14 sessions across the two days were focused on AI topics. Clearly, manufacturers want to embrace AI, but face challenges when it comes to the actual execution.

In such a physical, hands-on industry, UK manufacturers need support to finally move from ambition to execution and reach new levels of agility (and it’s not just about robotics). As global competition intensifies and customer expectations evolve, manufacturers must rethink how they operate, and AI offers the tools to do just that. The key is knowing where to start.

How UK manufacturers are using AI today

From predictive maintenance and process automation to robotics and demand forecasting, the transformation is underway. Those who embrace it early are already seeing the rewards. Across the UK, manufacturers are increasingly leveraging AI to reduce waste, optimise energy use (as the cost of electricity is a major challenge), and streamline operations. In practice, this looks like:

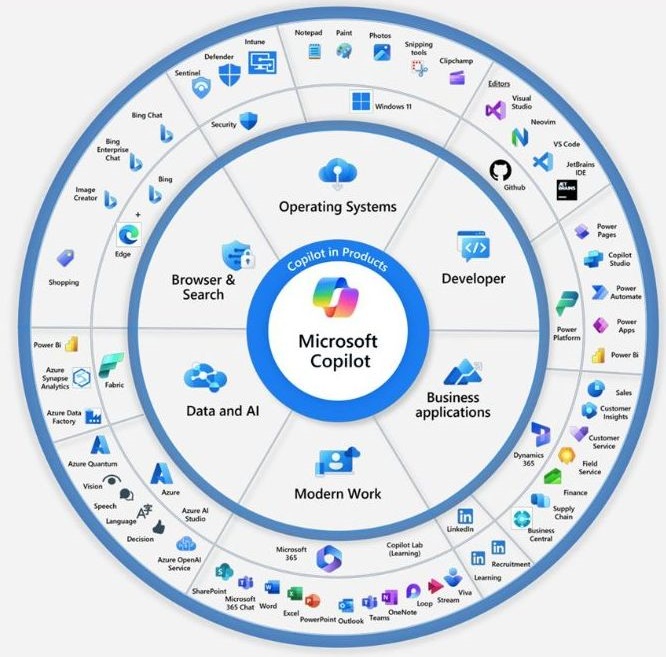

- AI agents (whether chatbots, autonomous agents or multi-agent systems)

- Vision systems (e.g. laser tagging, quality control checks)

- Image recognition (e.g. stock taking)

- Scanning and 3D printing (e.g. printing spare parts)

- Robotics (e.g. AGVs – automated guided vehicles – on the factory floor)

Success stories are already emerging: Marks & Spencer has used computer vision to reduce warehouse accidents by 80%, while machine learning is driving smarter inventory management across several large firms. But while the potential is vast, the journey is far from straightforward. Only a small number of manufacturers are currently using AI directly in their production processes.

What’s blocking AI in manufacturing?

AI isn’t a switch that we can just turn on, especially in an industry as ‘boots on the ground’ as manufacturing. Careful thought is needed about how to apply it properly throughout the organisation. There are countless stories where this depth of planning hasn’t happened, leading to a failed AI project. Common challenges with AI in manufacturing include:

- Misaligned strategy (in other words, AI not tied to business goals and/or lacking executive sponsorship)

- Fragmented and/or outdated data systems that hinder integration

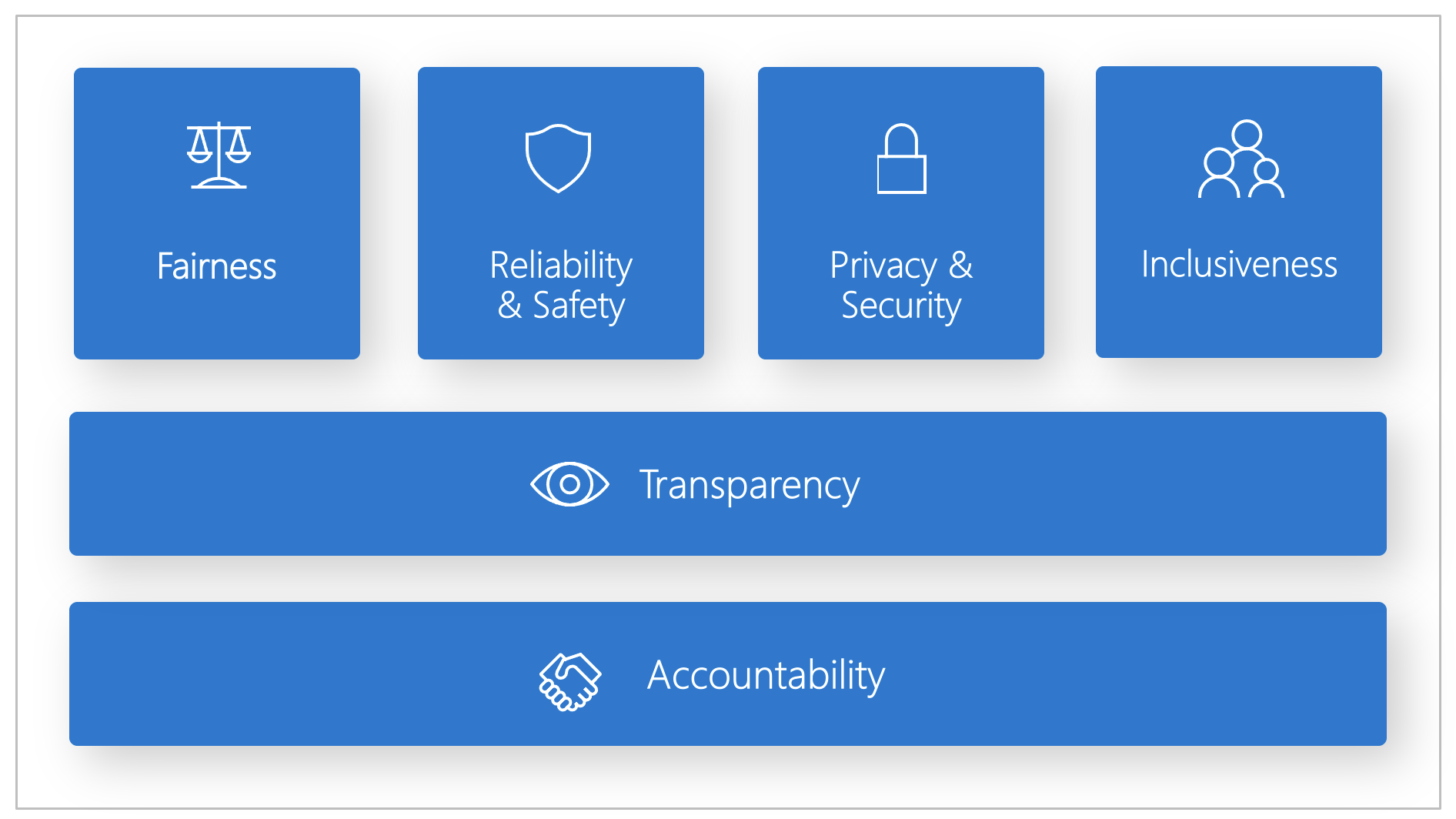

- Limited internal expertise (AI fluency)

- Weak governance structures (around data security, compliance and policies)

Regulatory complexity and data privacy concerns add further hesitation, and many manufacturers still lack awareness about AI’s practical applications and benefits. That’s a lot to consider. It’s not surprising that manufacturing is one of the slower industries to adopt AI. Overcoming these blockers will require coordinated efforts across government, industry, and technology providers.

Future challenges to AI adoption

To fully harness AI, manufacturers first and foremost need a skilled workforce and a robust digital infrastructure. This means secure cloud-based platforms for scalable data storage and processing, industrial IoT-enabled machinery (sensors and digital twins) to generate real-time operational data, and upskilling initiatives to empower staff with AI literacy and digital fluency.

In addition, it’s important to remember that AI adoption is 20% technology, 80% transformation. It’s primarily a case of empowering people, not just machines. A quote from the conference that stayed with me was ‘The best manufacturers are not just building products, they are building people’. Workforce skills must be seen as a strategic supply chain in their own right. To this end, Artificial Intelligence needs to be seen as ‘Assistive Intelligence’.

Therefore, the importance of change management cannot be overstated. Job roles are evolving, and the companies that succeed will be those whose workforce can adapt to change and is willing to upskill. Without these foundations, even the most promising AI tools can fall short.

The role of technology partners

Many manufacturers are stuck in pilot mode with AI. While the appetite is there, moving beyond ideas to real-world impact takes a leap. As manufacturers like Rolls-Royce have shown, the key to bridging that gap between ambition and execution lies in strategic technology partnerships.

Collaborations with technology partners are not just technical; they are transformational. Although the initial investment is high, digitalising operations gives manufacturers the ability to predict issues, forecast demand, and optimise processes. This allows even small teams to have big leverage and, in some cases, to identify new markets and service offerings as a result.

Make UK research suggests that by 2035, as much as £150bn could be added to UK GDP by closing the digitalisation gap in manufacturing. There is no doubt that the future of manufacturing is intelligent, connected, and data-driven. For most manufacturers, whether a large enterprise or an ambitious SME, now is the time to review how their operations can be modernised, streamlined, and optimised with AI. Technology partners should be there to meet you where you are, learn from you, and help you break through the barriers that are unnecessarily holding many manufacturers back.